the Creative Commons Attribution 4.0 License.

the Creative Commons Attribution 4.0 License.

Radar imaging with EISCAT 3D

Johann Stamm

Juha Vierinen

Juan M. Urco

Björn Gustavsson

Jorge L. Chau

A new incoherent scatter radar called EISCAT 3D is being constructed in northern Scandinavia. It will have the capability to produce volumetric images of ionospheric plasma parameters using aperture synthesis radar imaging. This study uses the current design of EISCAT 3D to explore the theoretical radar imaging performance when imaging electron density in the E region and compares numerical techniques that could be used in practice. Of all imaging algorithms surveyed, the singular value decomposition with regularization gave the best results and was also found to be the most computationally efficient. The estimated imaging performance indicates that the radar will be capable of detecting features down to approximately 90×90 m at a height of 100 km, which corresponds to a angular resolution. The temporal resolution is dependent on the signal-to-noise ratio and range resolution. The signal-to-noise ratio calculations indicate that high-resolution imaging of auroral precipitation is feasible. For example, with a range resolution of 1500 m, a time resolution of 10 s, and an electron density of , the correlation function estimates for radar scatter from the E region can be measured with an uncertainty of 5 %. At a time resolution of 10 s and an image resolution of 90×90 m, the relative estimation error standard deviation of the image intensity is 10 %. Dividing the transmitting array into multiple independent transmitters to obtain a multiple-input–multiple-output (MIMO) interferometer system is also studied, and this technique is found to increase imaging performance through improved visibility coverage. Although this reduces the signal-to-noise ratio, MIMO has successfully been applied to image strong radar echoes as meteors and polar mesospheric summer echoes. Use of the MIMO technique for incoherent scatter radars (ISRs) should be investigated further.

- Article

(6954 KB) - Full-text XML

- BibTeX

- EndNote

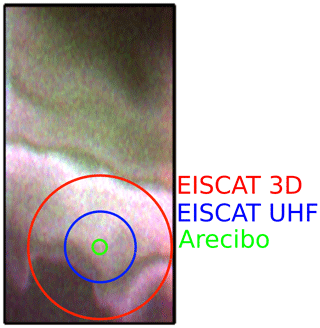

One of the measurement challenges in the study of the Earth's ionized upper atmosphere when using incoherent scatter radars (ISRs) is that the measurements often do not match the intrinsic horizontal resolution of the physical phenomena that are being studied. Conventional ISR measurements are ultimately limited in the transverse beam axis direction by the beam width of the radar antenna, which is determined by the diffraction pattern of the antenna. Even for large antennas, the beam width is typically around 1∘. The mismatch between geophysical feature scales and horizontal resolution obtained by a typical ISR antenna is demonstrated in Fig. 1, which shows an image of auroral airglow taken in the magnetic-field-aligned direction. Overlain on the image are the antenna beam diameters of three incoherent scatter radar antennas, namely EISCAT ultra-high frequency (UHF), EISCAT 3D, and Arecibo. It is clear that the auroral precipitation has an appreciable structure on scales smaller than the beam size. A conventional ISR measurement in this case will provide plasma parameters that are averaged over the area of the radar beam, preventing the observation of a small, sub-beam-width-scale structure. Only a radar with an antenna the size of the Arecibo Observatory dish (305 m) would provide an antenna beam width that approaches the scale size of auroral precipitation.

Another measurement challenge for ISRs is temporal sampling of the spatial region of interest. Single-dish radar systems can only measure in one direction at any given time, and the ability to move the beam into another direction depends on the speed at which the antenna can be steered. In addition, there is the minimum integration time required to measure one position. It takes a long time to sample a large horizontal region, and even then, the measurements of different horizontal positions are obtained at different times.

Figure 1An image of auroral optical emission in the magnetic-field-aligned direction, showing the horizontal distribution of the auroral precipitating electron flux. Overlain on the image are the beam widths of the EISCAT UHF, Arecibo, and EISCAT 3D radars, with approximately 0.5∘, 0.16∘, and 1.0∘ beam widths. The image is from the Auroral Structure and Kinetics (ASK) instrument (Ashrafi, 2007), courtesy of Daniel K. Whiter.

In order to increase the spatial resolution of radio measurements without resorting to constructing an extremely large continuous antenna structure, a technique called aperture synthesis imaging can be used (e.g. Junklewitz et al., 2016). It relies on a sparse array of antennas to estimate a radio image with a horizontal resolution equivalent to that of a large antenna. The correlation between the received signals can be used to produce an image of the brightness distribution of the radio source. This technique is widely used in radio astronomy to image the intensity of radio waves originating from different sky positions.

The application of aperture synthesis imaging for radar, i.e. ASRI, has been used in space physics to observe high signal-to-noise ratio targets (Hysell et al., 2009; Chau et al., 2019). There is a good amount of literature on ASRI techniques in two dimensions (range and one transverse beam axis direction) for imaging field-aligned irregularities (e.g. Hysell and Chau, 2012, and references therein). There has also been much research on the imaging of atmospheric and ionospheric features in three dimensions; for example, Urco et al. (2019), who applied it to observations of polar mesospheric summer echoes (PMSE) with the Middle Atmosphere Alomar Radar System (MAARSY); Palmer et al. (1998), who applied it on the middle and upper atmosphere radar in Japan; Yu et al. (2000), who applied it in a simulation study; and Chau and Woodman (2001), who applied it to observations of the atmosphere over Jicamarca. The currently available horizontal resolution of ASRI is around 0.5∘ with Jicamarca, but down to 0.1∘ for strong backscatter (Hysell and Chau, 2012) in the case of field-aligned ionospheric irregularities, and 0.6∘ with MAARSY for PMSE (Urco et al., 2019).

However, there is little literature directly on the incoherent scatter in three dimensions, but some approaches have been made, like Schlatter et al. (2015), who used the EISCAT aperture synthesis imaging array and the EISCAT Svalbard radar to image the horizontal structure of naturally enhanced ion acoustic lines (NEIALs) and Semeter et al. (2009), who interpolated sparse independent Poker Flat Incoherent Scatter Radar (PFISR) measurements to estimate the electron density variation over a 65×60 km area during an auroral event.

In radar imaging, the measurements are in the so-called visibility domain. Ensemble averages of the cross-correlation of complex voltages between two antennas represent a single sample of the visibility (Woodman, 1997; Urco et al., 2018). Throughout this article we will use the term “far field” for the region further away than the Fraunhofer limit of the radar. The region closer than the Fraunhofer limit we will call the “near field”. If the radar target is in the far field of the radar, the visibility domain is related via a Fourier transform to the horizontal brightness distribution or the radio image. Then, the measurements are samples of the Fourier transform of the spatial variation of backscatter strength, or brightness distribution, of the target (Woodman, 1997). The measurements are used to calculate the brightness distribution, or image, of the target.

So far, most of the incoherent scatter radar imaging has been done with a single transmitter and multiple receivers, thereby using a single-input–multiple-output (SIMO) system. The number of measurements and degrees of freedom is here determined by the number of receivers and their relative locations. Instead of using only one transmitter, multiple transmitters can be used when performing radar imaging. This allows one to increase the number of visibilities that can be measured, which can result in improved imaging performance – as long as the signal-to-noise ratio is sufficiently high. This technique is called multiple-input–multiple-output (MIMO) radar (Fishler et al., 2006). The MIMO technique for increasing spatial resolution has recently been demonstrated with the Jicamarca radar, when imaging equatorial electrojet echoes (Urco et al., 2018), and also with the MAARSY radar for imaging PMSE (Urco et al., 2019). The primary technical challenge with MIMO radar is separating the scattering of the signals corresponding to multiple transmitters once they have been received.

EISCAT 3D, from here on referred to as E3D, is a new multi-static incoherent scatter radar that is being built in Norway, Sweden, and Finland (McCrea et al., 2015; Kero et al., 2019). The core transmitting and receiving antenna array will be located in Skibotn, Norway (69.340∘ N, 20.313∘ E). There will be additional bi-static receiver antenna sites in Kaiseniemi, Sweden (68.267∘ N, 19.448∘ E), and Karesuvanto, Finland (68.463∘ N, 22.458∘ E). The core array of E3D will consist of 109 sub-arrays, each containing 91 antennas. The one-way half power full beamwidth (HPBW) or illuminated angle of the core array will be 1∘. On transmission, the array is capable of transmitting up to 5 MW of peak power at a frequency of 233 MHz. Additionally, there are 10 receive-only outrigger antennas around the core array, providing longer antenna spacings that can be used for high-resolution ASRI. Imaging will already be necessary to maintain the perpendicular resolution constant in the transition from EISCAT very high frequency (VHF) and UHF to E3D. It is possible that the EISCAT 3D radar can also be configured as a MIMO system, where the core array is separated into smaller sub-arrays which act as independent transmitters at slightly different locations. During the design phase, Lehtinen (2014) investigated the imaging performance of possible layouts of E3D in the far field. The study, however, does not include the current layout that is being built.

EISCAT 3D will not be able to measure radar echoes from magnetic-field-aligned irregularities, so it will not be possible to assume that the scattering originates from a 2D plane where the radar-scattering wave vector is perpendicular to the magnetic field. All radar imaging will need to be done in 3D and mostly for incoherent scatter. This poses the following two main challenges: 1) the signal-to-noise ratio will, in typical cases, be determined by incoherent scatter, which is much smaller than that used conventionally for ASRI; and 2) there are more unknowns that need to be estimated as, at each range, there is a 2D image instead of a 1D image that needs to be estimated.

For E3D, the Fraunhofer limit is at km, where D≈1.2 km is the longest baseline and λ=1.3 m is the wavelength of the radar. Measurements of the ionosphere are therefore taken in the near field of the radar. Woodman (1997) describes a technique to correct for the curvature in the backscattered field with an analogy of lens focusing. In this study, a different approach has been taken, where the near-field geometry is directly included in the forward model of the linear inverse problem formalism. In this case, it is not possible to resort to frequency domain methods to diagonalize the forward model. This comes at an increase in computational complexity, but this is not prohibitive in terms of computational cost with modern computers.

In this study, we will simulate the radar imaging measurement capabilities of the upcoming EISCAT 3D radar. The study is divided into the following sections. In Sect. 2, we investigate the achievable time and range resolution of E3D, and how they are connected. An expression for the cross-correlations between the received signals, taking into account the near-field geometry, is derived in Sect. 3. Section 4 describes the near-field forward model for radar imaging and describes several numerical techniques for solving the linear inverse problem. This section also includes a study of imaging resolution based on simulated imaging measurements.

In this section, we will calculate the required integration time for a certain range resolution with E3D. The elementary radar imaging measurement is an estimate of the cross-correlation of the scattered complex voltage measured by two antenna modules. The integration time in this case is the minimum amount of time that is needed to obtain a measurement error standard deviation (SD) for the cross-correlation estimate that is equal to a predefined limit. The estimation error of the cross-correlation determines the measurement error for the imaging inverse problem. By investigating the variance of the cross-correlation estimate, using statistical properties of the incoherent scatter signal, we can decouple the problem of the time and range resolution from imaging resolution, allowing us to study the performance of the imaging algorithm with a certain measurement error SD.

Our signal-to-noise calculations will be based on an observation of incoherent scatter from ionospheric plasma, which is the case with the smallest expected signal-to-noise ratio. We have ignored self-clutter, as the combination of the E3D core transmitter illuminating the target and a single receiving sub-array receiver module will inevitably be within a low signal-to-noise ratio regime that is dominated by receiver noise.

We will first deduce an expression for the measurement rate, that is, how many measurements are taken per second. There are the following two factors that determine the maximum rate at which independent observations of the scattering from the ionosphere can be made: (1) the minimum inter-pulse period length, which we set to d∕τp, with d as the duty cycle and τp as the pulse length; and (2) the incoherent scatter decorrelation time, which is inversely proportional to the bandwidth of the incoherent scatter radar spectrum B. The maximum of these two timescales determines the frequency of the independent measurements that can be made as follows:

If a transmitted long pulse is divided into NP bits, the number of measurements per long pulse can be multiplied by NP. In the E region, we can assume that the autocorrelation function is constant for the purpose of estimating the variance. Then, the number of lagged product measurements per transmit pulse is because we also can use measurements with different time lags. For the sake of simplicity, we assume that all lags within a radar transmit pulse are equally informative. This is approximately the case for E-region plasma measured using E3D. The number of measurements per second is then as follows:

Next, we will estimate the number of measurements needed to reduce the measurement error of an average cross-correlation measurement to a certain level. We consider a measurement model in which a measurement m is described by a linear combination of the parameter we want to estimate, , where x and ξ are considered as proper complex Gaussian random variables with zero mean and variance of, respectively, PS and PN. The noise power estimate PN is assumed to have no error. We estimate the signal power with the following:

where the bar denotes complex conjugation. It can then be shown that, in the following:

where ε is the relative SD and K is the number of measurements (Farley, 1969). If we require the correlation function to have a relative uncertainty under a certain level ϵ, e.g. , the equation can be solved for K in order to obtain the number of needed samples as follows:

where SNR is the signal-to-noise ratio. The integration time required to obtain a measurement with a certain level of uncertainty is now the following:

or is written as a function of SNR, as follows:

The received signal power PS can be found by the radar equation as follows:

where Ptx is the transmitted power, Gtx is the transmit and Grx the receive gain, λ is the radar wavelength, σ is the scattering cross section, and Rtx and Rrx are the distance from the scattering volume to the transmitter and receiver (e.g. Sato, 1989). Assuming that the Debye length is much smaller than the radar wavelength, the effective scattering cross section for a single electron in plasma (Beynon and Williams, 1978) is the following:

Here, σe is the Thomson scattering cross section , Ti is the ion, and Te is the electron temperature.

The total scattering cross section can be found by adding up the cross sections of all electrons in the illuminated volume NeV as follows:

The scattering volume can be approximated using a spherical cone as follows:

Here, is the range resolution of the measurement, where is the baud length, r is the range of the volume, and θ is the HPBW angle of the radar. By range, we mean the range from the centre of the core array in Skibotn to the target.

We assume that the noise is constant through the ion line spectrum. The noise power is then given by the following:

where Tsys is the system noise temperature, B is the bandwidth of the incoming ion line, and kB is the Boltzmann constant. The bandwidth we assume to be equal to 2 times the ion thermal velocity times wave number (2vthk) or the inverse of the pulse length , depending on which one is the largest. The ion thermal velocity is given by the following:

where mi is the ion mass, which we set equal to 31 u, corresponding to a mixture of and NO+. These are the two most dominant ion species in the E region (Brekke, 2013). The system noise temperature we set to 100 K. For all bit lengths investigated here, exceeds 2vthk by at least 1 order of magnitude. The bandwidth is therefore independent of the ion composition as long as the measurements are restricted to the E region.

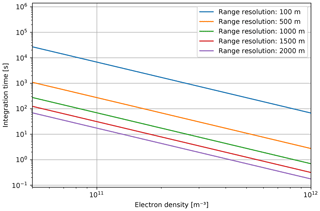

We can now use this to calculate the integration time of the electron density measurements in the E layer at 150 km for different range resolutions. The SD requirement is set at 5 %. The electron and ion temperatures we set to 400 and 300 K, respectively. We assume a monostatic radar with frequency f=230 MHz, HPBW of , and a transmitter with power of 5 MW. The transmitter gain for the core array we set to 43 dB and the receiver gain to 22 dB for one sub-array of the imaging array. The inter-pulse period τIPP is 2 ms, and the long pulse length is 0.5 ms. The results are shown in Fig. 2.

Figure 2Integration time of targets in the E region observed using the E3D core for transmitting and a single 91 antenna element module for receiving.

The figure shows that the integration time decreases with increasing electron density and decreasing range resolution. This confirms the expected trade-off between range and time resolution. If the electron density is not too low, a time resolution of a few seconds is possible. This, however, assumes a relatively low range resolution of 1000–2000 m, which still provides some useful information about the E-region plasma. When keeping a constant SD, an enhanced electron density can be used to improve either the time or range resolution.

When using MIMO imaging, the core array is divided into multiple independent groups when transmitting. This provides more baselines and increases the maximum antenna separation. In this case, the imaging resolution will be improved by having a larger aperture. One of the challenges in this case will be to separate the signals from different transmitters in the case of overspread radar targets. We assume that separate transmitters operate at the same frequency, and that the transmitted signals are distinguished using radar-transmit coding. This can be achieved in practice by using a different pseudorandom transmit code on each transmit group (Sulzer, 1986, Vierinen et al.; in preparation). Then, the transmit power is spread over the transmitters. However, since the scattering volume increases and then includes more scatterers, the power adds up again. Because of the smaller antenna area, the transmit gain must be divided by the number of transmitters. Additionally, there could be cross-coupling between antennas, which might cause buffer zones between transmitters. Then, the antenna area and gain decrease furthermore. In conclusion, the integration time for MIMO will at least be the number of transmitters times the integration time for SIMO.

The calculations do not include echoes other than those from incoherent scatter or enhancements other than electron density. In the case of PMSE (e.g. Urco et al., 2019) and NEIALs (e.g. Grydeland et al., 2004; Schlatter et al., 2015), the echo is significantly stronger than for incoherent scatter. These enhancements will also make shorter integration times available and will be more promising candidates for the use of MIMO imaging.

In this section, we calculate the correlation between signals from two different baselines that are transmitter–receiver pairs. The aim is to determine which baselines provide information about the ionospheric features of a certain scale size and to determine how the near-field geometry affects this correlation.

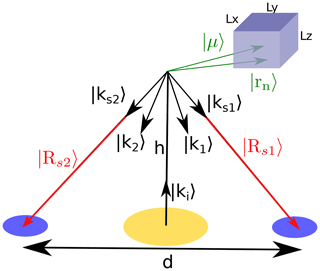

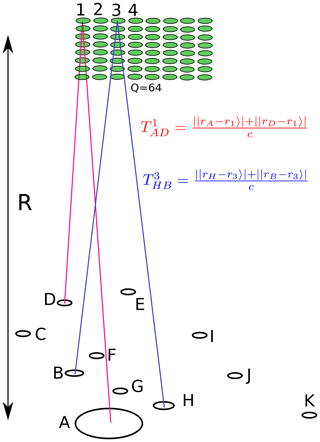

We consider a case with one transmitter and two receivers placed equidistant from the transmitter in every direction. This configuration is shown in Fig. 3.

Figure 3Set-up for calculating the cross-correlation function. The box represents an ionospheric feature with size L. The figure is based on the assumptions at the end of Sect. 2 but is not to scale.

Let the transmitter be placed in the origin and the receivers at |P1〉 and |P2〉. Let the transmitter transmit a signal of the form V0=Keiωt, where K is a time-independent constant, ω is the transmit frequency, and t is time. The electrical potential induced to the receiver antenna r then becomes the following:

where is the time delay from the transmitter to scatterer n and is the time delay from scatterer n to receiver r. Here, |Ri〉 is the vector from the transmitter to the centre of the illuminated plasma volume, |Rsr〉 is the vector from the centre of the plasma volume to receiver r, and |rn〉 is the vector from the centre of the plasma volume to scatterer n, like in Fig. 3. N is the number of scatterers in the scattering volume, and G∈ℜ is the scattering gain, which includes the free-space path loss. The gain may be dependent on the position of the scatterer, the scatterer itself, and on time, but we neglect these dependencies. We also neglect that the distance to the scatterer varies between the transmitter–receiver baselines. This has an order of magnitude of ≈10 m, which is lower than the best available range resolution.

The cross-correlation function for time lag τ=0 can then be written as follows:

By taking the first-order Taylor approximation of the time delays around , we find that and , where the hat denotes a unit vector. Carrying out this approximation is essentially the same as assuming plane waves. When also keeping the second-order terms, the near-field correction described by Woodman (1997) can be deduced. We note that , which is the Bragg scattering vector. Equation 16 can then be written as follows:

We assume that the scatterer positions are independent, identical, and normally distributed with a mean |μ〉 and covariance L that is like a Gaussian blob, as follows:

We use the definition of expectation and then solve the integral. Since the positions of the scatterers are assumed to be independent, the expectation becomes zero when . In addition, in a first-order approximation, because D≪h. The result then becomes the following:

The normalized cross-correlation function, in the following:

becomes the following:

We note that if the transmitter(s) and all receivers lie in a horizontal plane, then the vertical components of the Bragg scattering vectors are exactly equal and make the vertical components of |μ〉 and L, namely μz, Lxz, Lyz, and Lzz arbitrary. This means that the horizontal resolution is independent of the vertical resolution.

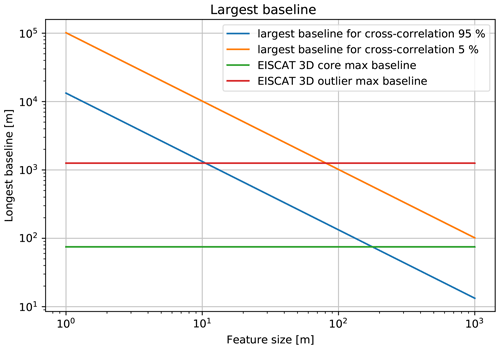

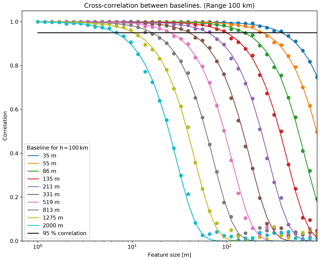

Equation 20 for and is plotted in Fig. 4, where L is the extent of the ionospheric feature in all dimensions, and I is the identity matrix. Figure 4 also shows the numerically simulated normalized correlation based on a direct simulation of Eq. (16), which does not significantly differ from the analytical expression. The plot shows that, for a height of 105 m (100 km) and a baseline of 211 m, the correlation crosses 0.95 at a blob size of 70 m and 0.5 at a blob size of 250 m. At 100 km height, the radar beam of E3D is about 1800 m wide. This means that, when considering a maximum baseline of 200 m and a ionospheric feature that is larger than 250×250 m, the addition of longer baselines contributes less in terms of recovering the image. The E3D core has a maximum baseline of 75 m. We can simplify these calculations by setting the magnitude of the desired least cross-correlation to ℛ, as follows:

We assume that the scatterers have equal variance in the x and y direction () and that all directions are uncorrelated ( = 0). By using the geometry as in Fig. 3, we obtain the following:

By combining Eqs. 20, 21, and 22, we obtain the following:

which can be rewritten to an expression for the feature size L as follows:

For short baselines or long distances D≲h∕5, the expression can be simplified. Thus, we solve for the baseline D and obtain the following:

The resulting expression shows how long the baseline can be to still make a contribution to recovering the feature. Equation 24 is plotted in Fig. 5.

A longer baseline can contribute to recover smaller features, but the improvement will decrease the longer the baseline is. For example, if we want to resolve a feature with a size of 100 m, baselines up to 200 m have large contributions to the imaging. Adding longer baselines will improve the resolving less and stop slightly above 1 km. This means that the improvement of the imaging quality by including the E3D outriggers will be large for the closest outriggers. The signal received by furthest ones will correlate little with the signal received by the core. From Fig. 5, we see that the correlation in the longest E3D baseline of 1.2 km is about 5 %. This means that if one wants to use E3D to invest in ionospheric features with an extent of around 100 m at 100 km range, there is no need to add longer baselines because the furthest outriggers are far enough. Also, it could be possible to improve the imaging quality in this example by having more baselines with lengths of around 100 m. This is one reason to use the E3D core as multiple transmitters to add new baselines.

When inserting ℛ=0.01, Eq. (24) shows that the diffraction limit is the same as for planar scatter under the assumptions mentioned in the deduction.

Baselines between the receiver sites in Skibotn, Karesuvanto, and Kaiseniemi are so long that they cannot be used for imaging, as signals will not be correlated anymore. The baseline cross-correlation calculations also do not claim that the image is well recovered if the largest baseline is included. This is more dependent on which baselines are used, how they are distributed, and how the image is recovered.

We consider a radar that may have single or multiple inputs (transmitters), and multiple outputs (receivers; SIMO or MIMO). The radar illuminates a plasma volume at range R with thickness dr and inside of the one-way HPBW θ. We imagine that the volume is divided into M parts or pixels (see Fig. 6).

Figure 6Example of multiple-input–multiple-output (MIMO) radar and plasma volume in its line of sight.

The signal transmitted from transmitter A and spread by plasma element/pixel q causes a voltage fluctuation in the receivers. The voltage fluctuation of receiver D, due to transmitter A and plasma pixel q, is denoted as , where VA is the amplitude of the signal sent by transmitter A, F is a function of the received signal amplitude, f is the radar-transmitting frequency, and is the time delay of the signal due to travelling from transmitter A, via pixel q, to receiver D (see Fig. 6). The correlation between the signals from two different baselines, AD and HB, due to an infinitesimal scattering volume dV, can be described as follows:

where Pt is transmit power, Gt is transmit gain, Gr is receiver gain, λ is the radar wavelength, σp is the scattering cross section for a single electron given by Eq. (9), ne is the electron density, Rt is the distance from transmitter to the scattering volume, Rr is the distance from the scattering volume to the receiver, and |r〉 is the position of the scattering volume. We integrate over the whole scattering volume to determine the whole measurement. At a certain time lag, we obtain the correlation for the range of interest. We assume that the gains are constant inside of the radar beam and zero otherwise and neglect the dependency of Rr and Rt on the exact position of the scattering volume. The correlation can then be written as follows:

We assume that the electron density (or brightness) distribution can be written as a sum of its discretized parts with constant electron density. We neglect variations in the phase shift inside of one part. The integral can then be replaced with a sum as follows:

The first factor here is constant and can be normalized away. The number of discretizations Q is still needed in the simulations if the original image has a resolution other than the reconstructions. The series of measurements can be written on a matrix form, as follows:

Here, becomes the following:

and is the theory matrix, and is the complex normally distributed noise vector.

Sometimes it is more convenient to have the cross-correlations in a matrix form. The measurements can be transferred from the one form to the other simply through reshaping the vector |m〉 to a matrix M, or the opposite, as follows:

To obtain an estimate of the intensities of the plasma in the image, Eq. (28) has to be inverted so that, in the following:

where B is a matrix that reconstructs the image |x〉 from the measurements |m〉. When inserting Eq. (28) into Eq. (30) and neglecting noise, we obtain . We would like the reconstructed image to be as close to reality as possible, and so, taking would give a perfect solution. However, since we have an underdetermined problem, A cannot be directly invertible. Other attempts are therefore needed.

4.1 Matched filter

When the scatterers are behind the Fraunhofer limit in the far field, Eq. (28) represents a Fourier transform. One approach to reinstating the original image would be the inverse Fourier transform, which can be represented as the Hermitian conjugate of the theory matrix, B=AH like a matched filter (MF). Unfortunately, the samples of the Fourier-transformed image that are the visibilities are sparsely and incomplete scattered, and the problem becomes underdetermined (Hysell and Chau, 2012; Harding and Milla, 2013). The approach can be interpreted as steering the beam after the statistical averaging and is therefore also called beam forming.

4.2 Capon method

Another approach is the Capon method (Palmer et al., 1998). The purpose of this method is to minimize the intensities in all directions other than the direction of interest, i.e. to minimize the side lobes of the antenna array in directions with interfering sources. The result is to invert the matrix of correlation measurements M (Palmer et al., 1998). In order to continue using the notation in this article, M−1 is reshaped back to a vector . The estimated intensities from the Capon method can then be written as follows:

where the fraction denotes element-wise division.

4.3 Singular value decomposition

The problem in Eq. (28) is overdetermined if the number of unknowns, i.e. the number of discretizations, is less than the number of measurements. This can be the case if we solve for an imaging resolution that is low enough. We can then use the method of least squares to solve it, obtaining the following:

One can also use the singular value decomposition (SVD) on the theory matrix, A=U S VH, where S is a diagonal matrix containing the singular values that are square roots of the eigenvalues of AH A, V contains the normalized eigenvectors of AH A, and U contains the normalized eigenvectors of A AH. The inversion matrix B can then be written as follows:

which, as can be shown, still gives the same solution as ordinary least squares but with increased numerical accuracy (Aster et al., 2013). Because of the inversion of the singular values, the eigenvectors corresponding to the smallest values contribute the most to the variance of the solution and make the solution sensitive to noise. Also, the problem can be rank deficient, i.e. that several columns in the theory matrix are nearly linear dependent on each other. The problem is then said to be ill-conditioned or multicollinear.

In such cases, some singular values will be practically zero, and the solution may be hidden in the noise. To prevent the noise sensitivity, the solutions can be regularized. This makes the reconstruction biased towards smoothness and zero but less noisy (Aster et al., 2013). We here consider two regularization techniques, namely truncated SVD (TSVD) and Tikhonov regularization. In TSVD, the inverse of the singular values below some limit is set to zero. The eigenvectors corresponding to the smallest singular values will then not contribute to the result. These eigenvectors often contain high-frequency components. Ignoring them makes the solution smoother. Tikhonov regularization or ridge regression can be done in several ways. In this article, we use zeroth-order Tikhonov regularization, where the singular values si are inverted with the following:

where α is a regularization parameter. By using SVD, we also can obtain the variance of the estimates. For pure least squares, it is , and for regularized least squares it is as follows:

4.4 CLEAN

The CLEAN algorithm is another attempt at reducing side lobes. It is based on the matched-filter approach but iteratively finds the real structure in the field of view (Högbom, 1974). It supposes a source where the image reconstructed by the matched filter is brightest. The source is added to an image that only contains the suspected sources, which will be the reconstructed image. Then, the measurements that the radar would have measured if the reconstructed image were the true image are subtracted from the real measurements, and the next suspected source is found. This procedure is repeated until there are no clear sources left in the measurements (Högbom, 1974). The method is a special case of compressed sensing and requires an assumption on how the measured sources appear (Harding and Milla, 2013). For sparse sources, a Dirac delta function could be appropriate but may lead to sparse solutions.

4.5 Performance of the radar layouts

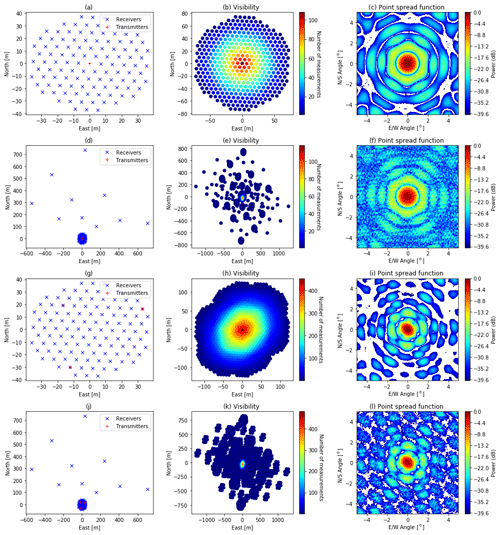

We considered different radar layouts. The layouts, together with plots of the visibilities and the point spread function, are shown in Fig. 7.

Figure 7E3D transmitter–receiver layouts considered. Panels (a), (d), (g), and (j) show the layouts, panels (b), (e), (h), and (k) show the visibilities, and panels (c), (f), (i), and (l) show the point spread function in the near field at 100 km range. The point spread function was calculated by reconstructing a 1×1 one-valued central pixel in a 129×129 zero-valued pixel image with a matched filter. Panel (a–c) uses the whole core array as a single transmitter and receives with each of the 109 antenna groups in the core array. Panel (d–f) also includes the interferometric outriggers. In panel (g–i) only the core is used, but it is divided into three transmitters. Finally, panel (j–l) uses both the outriggers and multiple transmitters.

When considering a layout with multiple transmitters and multiple receivers (MIMO), it is assumed that the signals from different transmitters can be distinguished. This increases the number of virtual receivers and thereby the visibilities become more widespread and denser (see Fig. 7). However, using multiple transmitters increases the integration time, as described in Sect. 2. We note that when receiving with the outriggers, the main beam becomes narrower. Also, there are gaps in the visibilities. This is due to the sparse locations of the outriggers and makes the point spread function look more irregular (cf. Fig. 7c and f). With multiple transmitters, the main beam becomes even narrower (cf. Fig. 7c and i). When both using multiple transmitters and receiving outriggers, the gaps in the visibility domain are partially filled, and the side lobes are clearly reduced. The MIMO layout used here could possibly be improved by using positions of the transmitters so that gaps in the visibility are filled more.

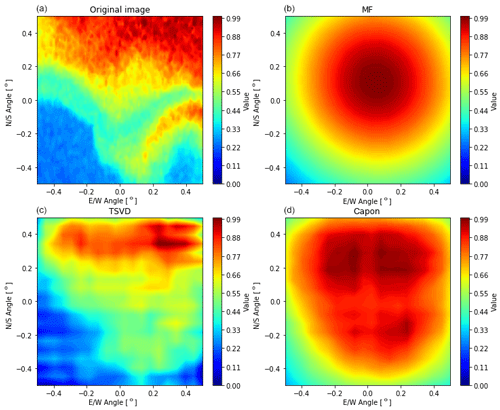

Figure 8Comparison of reconstructions. All antennas are transmitting together, like one transmitter, but receiving separately. The intensities are normalized to be between 0 and 1. Panel (a) shows the true image. The others show the reconstructed image, namely the matched filter (b), TSVD (c), and Capon (d). For TSVD, the singular values below 0.02 of the maximum singular value were ignored.

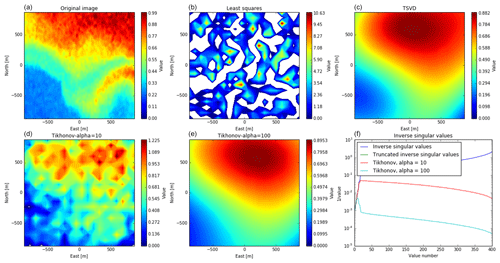

Figure 9Comparison of reconstructions, using SVD for the SIMO case only, using the core antennas. Panel (a) shows the true image. Panel (f) shows the inverse of the singular values of the theory matrix. The other figures show reconstructions with different weightings of the singular values. Panel (b) has no weighting and corresponds to the ordinary least squares method. In panel (c), the singular values below 2 % of the largest singular value are ignored/truncated away. In panels (d) and (e), the inverse of the low singular values are damped, like in Eq. (33), with regularizing parameters α of 10 and 100, respectively. The reconstructions consider an image resolution of 20×20 pixels, at 100 km altitude, where one pixel corresponds to 100×100 m. White spaces in the colour plots correspond to negative values.

4.6 Performance of the imaging techniques

We simulate E3D measurements using Eq. (28) with the presented antenna configurations. For the original image, we use part of Fig. 1. A section of 97×97 pixels was cut out of the figure, and the greyscale values were scaled to the range between 0 and 1. From the measurements, we reconstructed the images with the matched filter (MF), Capon, truncated singular value decomposition (TSVD) and CLEAN techniques. For TSVD, the singular values below 0.02 of the maximum singular value were truncated. This value gives a good compromise of the resolution and low noise level (regularization). For CLEAN, we used a gain of 1 and a threshold of 1.36 times the average value. We tried both Dirac delta and Gaussian functions in the CLEAN kernel. In Capon filtering, it so happens that the correlation measurement matrix M is singular. In such cases, TSVD is used to invert the matrix. This truncation ignores singular values that are less than 0.03 % of the largest singular value.

Noise is added to all cross-correlations, which corresponds to white complex Gaussian noise with a zero mean and 5 % SD in each receiver. The noise is equal for every reconstruction of a single resolution but varies between reconstruction in different resolutions. The results for the SIMO layout is shown in Fig. 8.

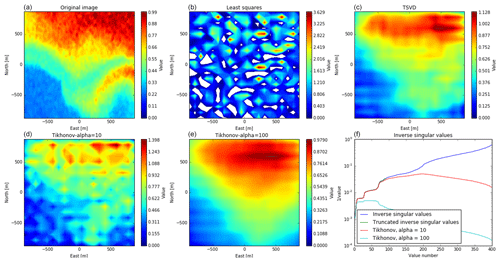

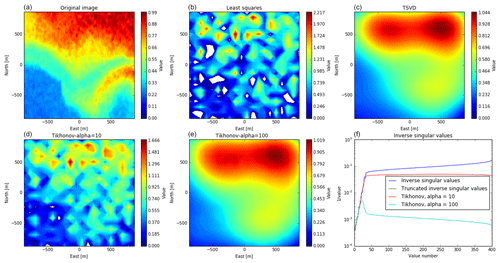

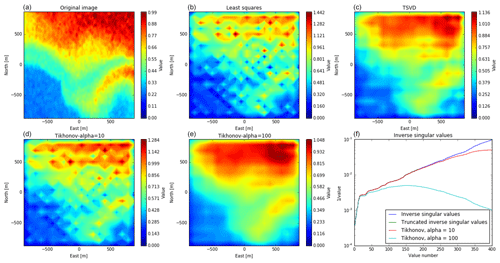

Of the reconstruction techniques, TSVD clearly gives the best results. It is also the only method that fairly reproduces the shape of the true image. Capon also partly reproduces the shape but far worse than TSVD. The matched filter apparently only reproduces something similar to the point spread function. The performance of CLEAN (not shown here) is accordingly poor. In terms of calculation time, CLEAN is the slowest algorithm followed by TSVD. MF and Capon are relatively fast. These differences become stronger when also considering MIMO. Most of the computation time of TSVD is used to invert the theory matrix. Since the theory matrix only varies from experiment to experiment, it must only be inverted once and can be saved afterwards. The computation time, therefore, is reduced to a simple matrix multiplication, and it is not considered as a problem for the real radar. We therefore concentrate on images reconstructed with techniques using SVD. Here, we compared ordinary least squares, TSVD with truncating singular values under 2 %, like before, and Tikhonov regularization with the regularizing parameter α=10 and 100. These results are shown in Figs. 9 to 12 for the four layouts, shown in Fig. 7a, d, g and j, which are SIMO without and with outriggers and one MIMO case without and with outriggers, respectively.

Figure 10Comparison of reconstructions, using SVD for the SIMO case, including the outriggers. The plots correspond to Fig. 9.

For all layouts, at a considered resolution of 20×20 pixels in the radar main beam (here, 1 m), the image reconstruction with the method of least squares is very noisy. The singular values of A vary over several orders of magnitude, which is a sign that columns in A are linearly dependent on each other. The regularized solutions look considerably better, with a regularization parameter of 10, and the recovered images are still a bit noisy but with stronger regularization as the images become smoother and closer to the original.

When comparing the strongly regularized images (panels c and e in Figs. 9–12), we see that, when including the outriggers, the images contain stripes. This is probably because the visibility in some regions has gaps (see Fig. 7). When only considering the core array, there are no gaps other than the spacing between antennas. The recovered images without the outriggers look smoother than those including the outriggers, but when including the outriggers, more details of the original image can be seen. Also, in the MIMO case with outriggers, the feature in the southeast can be seen in the reconstruction. For the other layouts, it is less visible and not clearly distinguishable from the main feature in the north.

Figure 11Comparison of reconstructions, using SVD for the MIMO case only, using the core antennas. The plots correspond to Fig. 9.

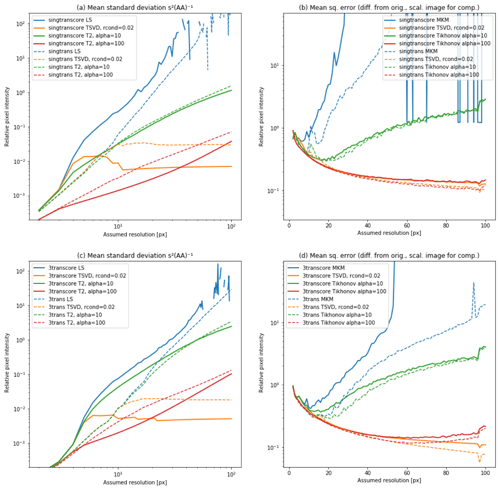

The uncertainty of the reconstruction itself is given by the variance of the recovered image; see Eq (34). The mean SD for the different layouts and reconstruction techniques is shown in Fig. 13. The plots of the least square variance are comparable to the variance plots in Lehtinen (2014). We note that, while Lehtinen (2014) investigates far-field imaging, Fig. 13 shows near-field imaging.

Figure 12Comparison of reconstructions, using SVD for the MIMO case, including the outriggers. The plots correspond to Fig. 9.

By using the SD, we neglect errors introduced by the discretization because they are not included in the variance. This assumption is true if the true image has the same resolution as the reconstruction, but that is only for the case of what Kaipio and Somersalo (2010) call an “inverse crime”. In reality, the target of E3D, the electron fluctuations in the ionosphere, is not discrete with steps of several metres. Also, by regularization, bias is introduced to the solution, which the variance does not take into account. Therefore, we also used the similarity with the true image for uncertainty estimation. As a measure, we used the mean square deviation, , so that a low value of s means great similarity. Because the original image and the reconstruction have different resolutions, the smallest is scaled up. The scaling was done by Lanczos resampling with a cos 2 kernel. A drawback with the mean square error (MSE) is that it could be influenced by the target, while the variance is not. The mean SD and the similarity to the original image are shown in Fig. 13 for all layouts considered here and reconstruction resolutions up to 100×100 pixels.

Figure 13Comparison of regularization techniques. Mean SD of the result is shown in panels (a) and (c). Panels (b) and (d) show the similarities between the recovered image and the true image. Both are shown relative to the mean intensity of the original image. Panels (a) and (b) show the relative SD and the similarity for the SIMO cases and the lower plots for the MIMO cases. The solid lines show recoveries when only using the core array, and the results with dashed lines include the outriggers. The line colour shows the type of regularization; blue is not regularized (ordinary least squares), orange is TSVD (including only singular values higher than 2 % of the greatest singular value), and green and red lines are Tikhonov damped singular values with α=10 and 100, respectively.

The variance of the recovered image is strongly increasing with the resolution we assume/would want the radar target to have. Image recovering with LS gives the highest variance for all layouts. The reason is the multicollinearity in the theory matrix, which amplifies the noise in the recovered image. At small resolutions, the variances are equal for the different reconstruction techniques but diverge when the regularization starts to influence the results. This divergence happens later, when including the outrigger antennas, and later still, when using MIMO rather than SIMO. For high resolutions, the variance of the TSVD solution is the lowest. However, since bias is introduced by regularizing the solution, this does not necessarily mean that the TSVD solution is the best.

The mean square error (MSE) of the recovered images is, in general, higher than their mean variance. For small resolutions, it decreases with increasing resolution until it reaches a bottom point. The error then increases again. For LS and Tikhonov with α=10, the minimum is at 10–20 pixels per direction. When including the outriggers, the minimum is at a later stage. Also, the error is lower. We also note the dip of the error at 97×97 pixels. This is exactly where the resolution of the recovered image matches the resolution of the original image, so these dips are the effect of inverse crimes and, therefore, not transferable to the real radar. For high resolutions, the MSE is higher for MIMO than for SIMO when using Tikhonov. This could indicate that, for MIMO, more regularization is required.

The original image contains values between 0 and 1 m−3, with a mean of about 0.5 m−3. In reality, the values will be far higher, and the uncertainty will increase accordingly. Therefore, the SD and the MSE are plotted relative to the mean value of the original image. In order to have a good recovery, the relative mean error should be below 1 and, if possible, far below that. All regularized solutions would fulfil this criterion, but the two strongest regularizations clearly have the lowest MSE. The minimum of MSE seems to be somewhere between 60×60 pixels for MIMO and 90×90 pixels for SIMO. In practice, the image reconstructions for higher resolutions look very similar to low resolution (20×20), without adding more details but with a better quality reconstructed image.

In the MSE plots, the curves flatten out to a minimum relative MSE at about 10 %. At 100 km range, 20×20 pixels correspond to a resolution of around 90×90 m. The TSVD indicates that the recovered image with MIMO could be improved with stronger Tikhonov regularization, but this has not been investigated.

The MSE of TSVD does not decrease significantly from SIMO to MIMO. Therefore, it seems that there is little gain in using the MIMO layouts considered here, as compared to SIMO. However, the feature in the southeastern part of the image in Figs. 10 and 12 becomes clearer with MIMO. For other targets, these results may look different. When comparing the point spread functions in Fig. 7, it could be that the MIMO configuration is better for point-like targets, like space debris or meteors, but this is beyond the scope of this article.

In this article, the MIMO approach to ISR and E3D has only been treated superficially. There are still some questions that must be answered. To distinguish between the transmitters, we assumed code diversity. However, there is a need to study how well the signals can be distinguished. This can influence the possible number of transmitters. The placing of the transmitters was not investigated here; the example in this article is a simple proposal. At the same time, the transmitter locations can have a great influence on the visibility coverage. Also, the SNR and integration time calculations for MIMO would need to be investigated more thoroughly.

In this article, we have studied the temporal and spatial resolution of the upcoming E3D radar in the case of aperture synthesis radar imaging, primarily focusing on the feasibility of imaging the incoherent scatter radar return from the E region. The most up-to-date radar design specifications at the time of writing this article was used as a basis of this study.

We find that the range and time resolutions are dependent on each other. When keeping the uncertainty level constant, a better range resolution goes on the cost of the time resolution. With an increase in the electron density, the resolution in time and/or range can be improved without increasing the noise level. Under normal conditions in the E layer (Te≈400 K, Ti≈300 K, ), with a desired integration time of 10 s, the achievable range resolution is slightly more above 1500 m.

The horizontal (imaging) resolution depends on the radar layout and the imaging technique. The imaging techniques that were evaluated were matched filter, least squares (using singular value decomposition without and with regularization), Capon, and CLEAN. Of these techniques, only regularized least squares gave satisfactory results. The two regularization techniques of either truncating or damping of the inverse singular values both worked and gave similar results.

These image reconstructions can be reduced to a simple matrix multiplication by saving the inverted theory matrix. Regularized SVD is therefore among the fastest reconstruction techniques amongst the ones evaluated. With Tikhonov regularization with a damping coefficient of 100, or truncating away singular values below 2 % of the largest value, the relative error of the recovered image can go down to 10 %. The resolution of the recovered image is about 60×60 pixels; at a 100 km range this corresponds to 30×30 m, but features smaller than 90×90 m will be blurred out.

The simulation results show that using the outriggers increases the imaging accuracy. Dividing the core array into multiple transmitters to obtain a MIMO system seems to increase the imaging resolution if the target is smooth. MIMO also has the drawback that it needs stronger signals or more integration time to keep the same measurement accuracy as SIMO. However, this needs further investigation as MIMO may be useful for very bright targets, such as PMSE, and point-like targets, like space debris or meteors, but the latter needs further investigation.

We conclude that radar imaging with EISCAT 3D is feasible.

The programming code is available from the corresponding author upon request.

JS carried out the radar simulations and calculations and prepared the paper. JV suggested the topic and supervised the project. JMU, BG, and JLC gave advice on decorrelation and on imaging. All authors discussed the work and participated in preparing the paper.

Juha Vierinen is an editor on the editorial board of the journal for this special issue.

This article is part of “Special Issue on the joint 19th International EISCAT Symposium and 46th Annual European Meeting on Atmospheric Studies by Optical Methods”. It is a result of the 19th International EISCAT Symposium 2019 and 46th Annual European Meeting on Atmospheric Studies by Optical Methods, Oulu, Finland, 19–23 August 2019.

Johann Stamm thanks Harri Hellgren for providing information about EISCAT 3D design. The EISCAT association is funded by research organizations in Norway, Sweden, Finland, Japan, China, and the United Kingdom.

This research has been supported by the Tromsø Science Foundation (as part of the Radar Science with EISCAT 3D project).

This paper was edited by Andrew J. Kavanagh and reviewed by two anonymous referees.

Ashrafi, M.: ASK: Auroral Structure and Kinetics in action, Astron. Geophys., 48, 35–37, https://doi.org/10.1111/j.1468-4004.2007.48435.x, 2007. a

Aster, R. C., Borchers, B., and Thurber, C. H.: Parameter Estimation and Inverse Problems, Academic Press, Waltham, 2 edn., 2013. a, b

Beynon, W. and Williams, P.: Incoherent scatter of radio waves from the ionosphere, Rep. Prog. Phys., 41, 909–947, 1978. a

Brekke, A.: Physics of the upper polar atmosphere, Springer, Heidelberg, 2 edn., 2013. a

Chau, J. L. and Woodman, R. F.: Three-dimensional coherent radar imaging at Jicamarca: comparison of different inversion techniques, J. Atmos. Sol.-Terr. Phys, 63, 253–261, 2001. a

Chau, J. L., Urco, J. M., Avsarkisov, V., Vierinen, J. P., Latteck, R., Hall, C. M., and Tsutsumi, M.: Four-Dimensional Quantification of Kelvin-Helmholtz Instabilities in the Polar Summer Mesosphere Using Volumetric Radar Imaging, Geophys. Res. Lett., 47, 1–10, https://doi.org/10.1029/2019GL086081, 2019. a

Farley, D. T.: Incoherent Scatter Correlation Function Measurements, Radio Sci., 4, 935–953, https://doi.org/10.1029/RS004i010p00935, 1969. a

Fishler, E., Haimovich, A., Blum, R. S., Cimini, L. J., Chizhik, D., and Valenzuela, R. A.: Spatial diversity in radars-Models and detection performance, IEEE Trans. Signal Process., 54, 823–838, 2006. a

Grydeland, T., Blixt, E. M., Løvhaug, U. P., Hagfors, T., La Hoz, C., and Trondsen, T. S.: Interferometric radar observations of filamented structures due to plasma instabilities and their relation to dynamic auroral rays, Ann. Geophys., 22, 1115–1132, https://doi.org/10.5194/angeo-22-1115-2004, 2004. a

Harding, B. J. and Milla, M.: Radar imaging with compressed sensing, Radio Sci., 548, 582–588, 2013. a, b

Hysell, D. and Chau, J.: Aperture Synthesis Radar Imaging for Upper Atmospheric Research, in: Doppler Radar Observations – Weather Radar, Wind Profiler, Ionospheric Radar, and Other Advanced Applications, edited by Bech, J. and Chau, J. L., pp. 357–376, IntechOpen, Rijeka, 2012. a, b, c

Hysell, D. L., Nossa, E., Larsen, M. F., Munro, J., Sulzer, M. P., and González, S. A.: Sporadic E layer observations over Arecibo using coherent and incoherent scatter radar: Assessing dynamic stability in the lower thermosphere, J. Geophys. Res., 114, https://doi.org/10.1029/2009JA014403, 2009. a

Högbom, J. A.: Coherent radar imaging with Capon's method, Astrophys. Suppl., 15, 417–426, 1974. a, b

Junklewitz, H., Bell, M. R., Selig, M., and Enßlin, T. A.: RESOLVE: A new algorithm for aperture synthesis imaging of extended emission in radio astronomy, Astron. Astrophys., 586, A76, https://doi.org/10.1051/0004-6361/201323094, 2016. a

Kaipio, J. P. and Somersalo, E.: Statistical and Computaional Inverse Problems, Springer, New York, 2010. a

Kero, J., Kastinen, D., Vierinen, J., Grydeland, T., Heinselman, C. J., Markkanen, J., and Tjulin, A.: EISCAT 3D: the next generation international atmosphere and geospace research radar, in: Proceedings of the First NEO and Debris Detection Conference, edited by Flohrer, T., Jehn, R., and Schmitz, F., ESA Space Safety Programme Office, Darmstadt, 2019. a

Lehtinen, M. S.: EISCAT_3D Measurement Methods Handbook, Sodankylä Geophysical Observatory Reports, Sodankylän geofysiikan observatorio, Sodankylä, http://jultika.oulu.fi/Record/isbn978-952-62-0585-4 (last access: 23 January 2019), 2014. a, b, c

McCrea, I., Aikio, A., Alfonsi, L., Belova, E., Buchert, S., Clilverd, M., Engler, N., Gustavsson, B., Heinselman, C., Kero, J., Kosch, M., Lamy, H., Leyser, T., Ogawa, Y., Oksavik, K., Pellinen-Wannberg, A., Pitout, F., Rapp, M., Stanislawska, I., and Vierinen, J.: The science case for the EISCAT_3D radar, Prog. Earth Planet. Sci., 2, 21, 2015. a

Palmer, R. D., Gopalam, S., Yu, T.-Y., and Fukao, S.: Coherent radar imaging with Capon's method, Radio Sci., 33, 1585–1598, 1998. a, b, c

Sato, T.: Radar principles, in: Middle atmosphere program – Handbook for MAP, edited by Fukao, S., International Council of Scientific Unions, Kyoto, https://ntrs.nasa.gov/archive/nasa/casi.ntrs.nasa.gov/19910017301.pdf (last access: 6 October 2020), 1989. a

Schlatter, N., Belyey, V., Gustavsson, B., Ivchenko, N., Whiter, D., Dahlgren, H., Tuttle, S., and Grydeland, T.: Auroral ion acoustic wave enhancement observed with a radar interferometer system, Ann. Geophys., 33, 837–844, 2015. a, b

Semeter, J., Butler, T., Heinselman, C., Nicolls, M., Kelly, J., and Hampton, D.: Volumetric imaging of the auroral atmosphere: Initial results from PFISR, J. Atmos. Sol.-Terr. Phys, 71, 738–743, https://doi.org/10.1016/j.jastp.2008.08.014, 2009. a

Sulzer, M. P.: A radar technique for high range resolution incoherent scatter autocorrelation function measurements utilizing the full average power of klystron radars, Radio Sci., 21, 1033–1040, 1986. a

Urco, J. M., Chau, J. L., Milla, M. A., Vierinen, J. P., and Weber, T.: Coherent MIMO to Improve Aperture Synthesis Radar Imaging of Field-Aligned Irregularities: First Results at Jicamarca, IEEE Trans. Geosci. Remote Sens., 56, 2980–2990, 2018. a, b

Urco, J. M., Chau, J. L., Weber, T., and Latteck, R.: Enhancing the spatiotemporal features of polar mesospheric summer echoes using coherent MIMO and radar imaging at MAARSY, Atmos. Meas. Tech., 12, 955–969, 2019. a, b, c, d

Woodman, R. F.: Coherent radar imaging: Signal processing and statistical properties, Radio Sci., 32, 2373–2391, 1997. a, b, c, d

Yu, T.-Y., Palmer, R. D., and Hysell, D. L.: A simulation study of coherent radar imaging, Radio Sci., 35, 1129–1141, https://doi.org/10.1029/1999RS002236, 2000. a